BoscoR: Extending R from the desktop to the Grid - accepted at SC'14

A pleasant surprise: the paper "BoscoR: Extending R from the desktop to the Grid" has been accepted to the 7th Workshop on Many-Task Computing on Clouds, Grids, and Supercomputers (MTAGS 2014), to be held at SC'14 in New Orleans, November 16 to 21, 2014.

I am one of six authors; Derek Weitzel is the lead. The others are Dan Fraser, Marco Mambelli, Jaime Frey, and David Swanson.

We focused on end users. A key design goal was a flat learning curve for any laptop R user who tries BoscoR. In addition to the quantitative analysis, we decided to do an evaluative analysis with R researchers; otherwise, we risked producing almost perfect software that nobody uses.

In High Performance Computing (HPC), ease of use is not usually the main goal. I want to thank the peer reviewers who accepted this new, door-opening focus in high performance and high throughput computing. We care about users unfamiliar with grids, clusters, clouds, and dynamic data centers. We want them to be productive while enjoying our high throughput computing applications and forming new habits with them.

R usage has exploded, making headlines even in The New York Times ("Data Analysts Captivated by R's Power"). An interesting passage from that article is quoted further below.



Bosco was originally designed to be coupled with any application. In face-to-face customer interviews, however, we noticed that researchers do not want to work at the command line. They looked us straight in the eye and said clearly, in words and body language: "We want to use the same graphical user interfaces we are accustomed to in our day-to-day work."




What is the R Project for Statistical Computing?

R is a free software environment for statistical computing and graphics. It compiles and runs on a wide variety of UNIX platforms, Windows, and MacOS. Quoting from our paper's abstract, BoscoR "is a framework to execute R functions on remote resources from the desktop using Bosco. The R language is attractive to researchers because of its high level programming constructs which lower the barrier of entry for use. As the use of the R programming language in HPC and High Throughput Computing (HTC) has grown, so too has the need for parallel libraries in order to utilize computing resources."


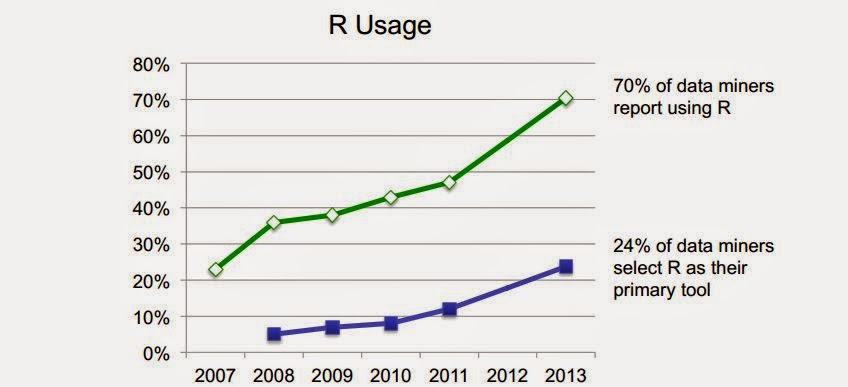

Fig. 1: Rexer Analytics reports explosive usage of R among data miners

R first appeared in 1996, when the statistics professors Ross Ihaka and Robert Gentleman of the University of Auckland in New Zealand released the code as a free software package. According to them, the notion of devising something like R sprang up during a hallway conversation. They both wanted technology better suited for their statistics students, who needed to analyze data and produce graphical models of the information. Most comparable software had been designed by computer scientists and proved hard to use. Lacking deep computer science training, the professors considered their coding efforts more of an academic game than anything else. Nonetheless, starting in about 1991, they worked on R full time. “We were pretty much inseparable for five or six years,” Mr. Gentleman said. “One person would do the typing and one person would do the thinking.”

From the very beginning, we learn that statistics professors are generally not computer scientists. No one can be a jack of all trades.

The web page "CRAN Task View: High-Performance and Parallel Computing with R" shows that existing R parallelism is mostly confined to a single server or laptop. Quoting from our paper:

Data mining requires computational resources, sometimes more computational resources than can be provided by their desktop. In a recent study, “Available computing power” was the second most common problem for big data analysis. In addition, the respondents stated that distributed or parallel processing was the least common solution to their big data needs. This could be attributed to the difficulty of processing data with the R language on distributed resources, a challenge we set out to solve with BoscoR.

A reason that distributed computing is not seen as a popular solution to big data processing is that scientists are more familiar processing on their desktop than in a cluster environment. R is typically used by people that have not used distributed computing before and do most of their analysis on their local systems with IDEs such as RStudio. Users are unaccustomed to the traditional distributed computing model of batch processing in which there is no interactive access to the running processes.

What R researchers say after using BoscoR

Another quote from the paper:

I was one of the authors who conducted these interviews. Here are more details:

During follow-up interviews with users after using BoscoR, we received many positive reviews of the framework. Improving the user experience of using R on distributed resources was a primary goal of BoscoR. One example of a positive review was from a microbiology researcher from the University of Wisconsin:

"I have a huge set of data, which I have to split into pieces to be handled by each node. This is something I can do with the "grid.apply" function. This reduces the submit time from several hours to several seconds... it is a phenomenal improvement. This will greatly increase my use of grid computing, as right now, I only use grid computing when I have no other choice."

So, here is the deal. Currently, if I want to do something in high throughput supercomputing, I use the classical resource manager and face a barrier of several hours. There is something I want to run right now, and I have been postponing it.

Days go by and I don't do anything.

So here is what "easier to do" means: new habits, pleasure, less tedious work, more creativity, more satisfaction, more joy, and a feeling of power to do things that I, the user, have never been able to do before. Now that I can do with BoscoR's "grid.apply" exactly what I currently do with the classical resource manager, I will use BoscoR all the time, every single day.

This is where technology turns into emotion. When I drove a Tesla, all I felt was a driver's exhilaration, and I forgot about all the technical marvels inside.
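The researcher's workflow quoted above, splitting a large dataset into one piece per node and applying the same function to every piece, is the classic split-apply-combine pattern. Below is a minimal local Python sketch of that pattern, under stated assumptions: `split_into_chunks` and `split_apply` are hypothetical names, not part of BoscoR or GridR, and every `f(chunk)` call runs on the local machine, whereas in the researcher's BoscoR workflow each piece would be handed to `grid.apply` and run remotely.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def split_into_chunks(data: Sequence[T], n_chunks: int) -> List[Sequence[T]]:
    """Split data into n_chunks contiguous pieces whose sizes differ by at most one."""
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    base, extra = divmod(len(data), n_chunks)
    chunks: List[Sequence[T]] = []
    start = 0
    for i in range(n_chunks):
        # The first `extra` chunks each get one additional element.
        size = base + (1 if i < extra else 0)
        chunks.append(data[start:start + size])
        start += size
    return chunks


def split_apply(data: Sequence[T], f: Callable[[Sequence[T]], U], n_chunks: int) -> List[U]:
    """Apply f to every chunk and collect the per-chunk results in order."""
    # Hypothetical stand-in: on a grid each f(chunk) would be a separate remote job;
    # here the calls simply run one after another on this machine.
    return [f(chunk) for chunk in split_into_chunks(data, n_chunks)]


if __name__ == "__main__":
    partial_sums = split_apply(list(range(10)), sum, 3)
    print(partial_sums, sum(partial_sums))  # [6, 15, 24] 45
```

Keeping the chunks contiguous and in order means the combine step can be a plain concatenation or reduction of the per-chunk results.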

How BoscoR does it

It all started with the work of Derek Weitzel, who created software called Campus Factory in 2011. Campus Factory is a distributed high throughput computing framework that links clusters without changing their security or execution environments, two of the main stumbling blocks in accessing and doing work on remote clusters.

Campus Factory led to the idea of Bosco, which is designed to run without administrator intervention. Bosco automatically installs the Bosco client on a remote cluster. SSH was chosen as the protocol because it is used nearly universally for cluster access. Jobs are submitted to Bosco, which then submits them over SSH to the remote cluster. Input files are transferred over the SSH connection as well. Bosco then monitors the job on the remote cluster and reports its status as idle, running, or completed. Once the job has completed, Bosco transfers the output files back to the submit host.


Fig. 2: Architecture of Bosco
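The Bosco submit, monitor, and return cycle described above can be modeled as a small state machine. This is a hypothetical Python sketch, not Bosco's actual code or API: `RemoteJob`, `JobState`, and the log strings are illustrative names, and the SSH transfers are only recorded, never performed. It assumes a job only moves forward through the three reported states (idle, running, completed), ignoring failures and resubmission, and that outputs come back to the submit host only after completion.

```python
from enum import Enum
from typing import Iterable, List, Optional


class JobState(Enum):
    IDLE = "idle"
    RUNNING = "running"
    COMPLETED = "completed"


# Assumed forward-only transitions between the three reported states.
ALLOWED_TRANSITIONS = {
    JobState.IDLE: {JobState.RUNNING},
    JobState.RUNNING: {JobState.COMPLETED},
    JobState.COMPLETED: set(),
}


class RemoteJob:
    """Hypothetical model of one job forwarded over SSH (not Bosco's real API)."""

    def __init__(self, job_id: str, input_files: Iterable[str]) -> None:
        self.job_id = job_id
        self.input_files: List[str] = list(input_files)
        self.state: Optional[JobState] = None
        self.outputs_returned = False
        self.log: List[str] = []

    def submit(self) -> None:
        # Inputs travel over the same SSH connection used to submit the job.
        self.log.append(f"ssh: copy inputs {self.input_files}")
        self.log.append(f"ssh: submit job {self.job_id}")
        self.state = JobState.IDLE

    def update(self, new_state: JobState) -> None:
        """Record a status reported by the remote cluster."""
        if self.state is None:
            raise RuntimeError("job has not been submitted yet")
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state
        self.log.append(f"status: {new_state.value}")
        if new_state is JobState.COMPLETED:
            # Only after completion are outputs copied back to the submit host.
            self.log.append("ssh: copy outputs back to submit host")
            self.outputs_returned = True


if __name__ == "__main__":
    job = RemoteJob("42", ["data.csv"])
    job.submit()
    job.update(JobState.RUNNING)
    job.update(JobState.COMPLETED)
    print("\n".join(job.log))
```

Rejecting out-of-order updates (for example idle straight to completed) keeps the "outputs only after completion" rule from being bypassed.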

Looking at various applications, we discovered R. It is open source and has an estimated two million plus users. Another author, Dan Fraser, traveled to Spain for the R users conference and discovered unusual interest in a version of Bosco made specifically for R users.

We combined Bosco with GridR, another module that we updated.


Fig. 3: GridR input generation

As we explain in the paper:

GridR was modified to detect, and if necessary install, R on the remote system. This was performed by a bootstrap process. In the GridR-generated submit file, the executable listed to run on the remote system is not R but the bootstrap executable. The bootstrap executable detects whether R is installed on the remote system. If it is installed, it simply executes the user-defined function against the input data. If R is not installed, the bootstrap downloads the appropriate version of R for the remote operating system. The supported platforms are the same as Bosco's: CentOS 5/6 and Debian 6/7. R is downloaded from a central server operated by the OSG's Grid Operations Center.

Now you tell me: isn't it cool?
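The bootstrap step described above boils down to one decision per remote node. The Python sketch below illustrates that decision under assumptions: it is not the actual BoscoR bootstrap executable, `find_r` and `bootstrap_action` are hypothetical names, the `"unsupported"` outcome is our own guess for platforms the text does not list, and the real download from the Grid Operations Center server is deliberately left out.

```python
import shutil
from typing import Optional

# Platforms listed as supported (the same as Bosco's): CentOS 5/6 and Debian 6/7.
SUPPORTED_PLATFORMS = {("centos", "5"), ("centos", "6"), ("debian", "6"), ("debian", "7")}


def find_r() -> Optional[str]:
    """Return the path of an `R` executable on PATH, or None if R is not installed."""
    return shutil.which("R")


def bootstrap_action(r_path: Optional[str], distro: str, major_version: str) -> str:
    """Hypothetical sketch of the bootstrap decision on one remote node."""
    if r_path is not None:
        # R is already present: run the user-defined function on the input data.
        return "run"
    if (distro.lower(), major_version) in SUPPORTED_PLATFORMS:
        # No R, but a matching build exists: download it for this OS, then run.
        return "download-then-run"
    # Assumption: no prebuilt R for this OS, so the job cannot proceed.
    return "unsupported"


if __name__ == "__main__":
    print(bootstrap_action(find_r(), "centos", "6"))
```

Separating detection (`find_r`) from the decision (`bootstrap_action`) keeps the decision a pure function that can be checked without touching the node.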

Job Submission: Direct versus Glidein

You can read the paper for the exact definitions, but here is an interesting plot comparing the two methods:


Fig. 4: Comparison of job submission methods

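Overall, direct and glidein submission have roughly similar workflow runtimes, but for short jobs glidein has significantly shorter workflow runtimes. This can be attributed to the advantages of using a high throughput scheduler over a high performance scheduler: once a glidein (a pilot job) starts on the cluster, it can run many short jobs back to back without each one waiting in the cluster's batch queue.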
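Why short jobs favor glideins can be seen with a back-of-the-envelope model. The Python sketch below is a toy model, not the paper's measurement method: it assumes every direct job pays a fixed batch-scheduler delay `sched_delay` before it starts, while each glidein pays that delay once and then starts jobs with a much smaller `dispatch_delay`. The function names and all parameter values are hypothetical and chosen only for illustration.

```python
import math


def direct_makespan(n_jobs: int, slots: int, job_time: float, sched_delay: float) -> float:
    """Direct submission: every job waits sched_delay in the batch queue before it runs."""
    waves = math.ceil(n_jobs / slots)  # jobs run `slots` at a time
    return waves * (sched_delay + job_time)


def glidein_makespan(n_jobs: int, slots: int, job_time: float,
                     sched_delay: float, dispatch_delay: float) -> float:
    """Glidein: pilots wait sched_delay once, then pull jobs back to back."""
    waves = math.ceil(n_jobs / slots)
    return sched_delay + waves * (dispatch_delay + job_time)


if __name__ == "__main__":
    # Illustrative values only (seconds); not taken from the paper.
    for job_time in (10, 3600):
        d = direct_makespan(100, 10, job_time, sched_delay=60)
        g = glidein_makespan(100, 10, job_time, sched_delay=60, dispatch_delay=1)
        print(f"job_time={job_time}s direct={d}s glidein={g}s")
```

With these made-up numbers, 100 ten-second jobs take 700 s directly versus 170 s with glideins, while 100 one-hour jobs take 36600 s versus 36070 s: the per-job queue delay dominates short jobs and becomes negligible for long ones, matching the qualitative pattern described above.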

A future startup?

I am convinced that BoscoR can become a great tool for delivering science that benefits society and for creating wealth, very much like the many successful Silicon Valley startups.

However, this depends on the commitment of the potential engineering founders, a delicate issue in academia.


Acknowledgements

- To all the Bosco team members, who made such a difference by letting me work and enjoy my life at the same time

- To Open Science Grid for financing this work

- To all the other wonderful people I met at OSG, XSEDE, and Fermilab
