The edge computing blues

As a writer, I live with unwritten words, words I wanted to say a long time ago. It took me two years to say "Aha."

Edge platform blues

Sunay Tripathi, CTO of MobiledgeX, is an able and respected scientist and engineer who describes the MobiledgeX blues with professional honesty. Why, three years after its creation, is their telecom edge platform still not mainstream?

... the edge is pretty complicated. For example, we started a couple of years ago, as most do, thinking simply and focusing on latency, but now we see a more interesting problem which incorporates a broader set of issues -- location, provider business model, governmental and privacy regulations, and global operation, to name some. We think that there are multiple edges and the important question is which applications work best on which edge, and how that changes over time.

It’s also taken a while to understand how to work with our parent, Deutsche Telekom, a $90 billion company.

This is a veiled allusion to the tacit pressure the parent exerts on its offspring to generate significant revenue.

Shooting in the dark

The methodology used is a "shooting in the dark" lottery, and it is called "bets":

There are lots of issues in trying to use the public cloud within the mobile infrastructure... our advice (and our plan) is to make multiple bets, but to choose those bets carefully. By “bet” we mean some real, nascent business initiative that is possible to pilot and learn from today, a seed that might grow into a big tree.

Artificial Intelligence

From the article “Artificial Intelligence Will Do What We Ask. That’s a Problem.”:

"The danger of having artificially intelligent machines do our bidding is that we might not be careful enough about what we wish for. The lines of code that animate these machines will inevitably lack nuance, forget to spell out caveats, and end up giving AI systems goals and incentives that don’t align with our true preferences.

"A major aspect of the problem is that humans often don’t know what goals to give our AI systems, because we don’t know what we really want."

The model needs intelligence. That intelligence can no longer be only cognitive; to succeed, we must also consider and develop emotional and social intelligence.

“In a nutshell, intelligence is marked by one’s ability to process and appropriately respond to new and varied stimuli” (Mathew Corbiu, 2020).

Suppose an agent has to tell a not-yet-existing AI model what to do. We would soon realize that no one can give exact instructions to an intelligent machine; instead, the machine itself has to discover what the human wants.

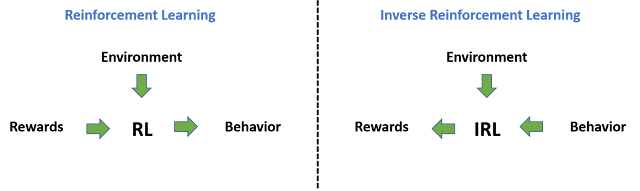

Inverse reinforcement learning (IRL) is a relatively young field concerned with learning an agent’s objectives, values, or rewards by observing its behavior. In a way, the model “psychoanalyzes” the agent: What are the agent’s objectives? What values does it hold, and what rewards does it seek?

RL versus IRL. Source: Alexandre Gonfalonieri

“The goal [of RL] is to learn a decision process to produce behavior that maximizes some predefined reward function. Basically, the goal [of IRL] is to extract the reward function from the observed behavior of an agent.”
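To make the RL/IRL contrast concrete, here is a minimal Python sketch under toy assumptions that are not from the source: a five-state corridor, three hypothetical candidate reward functions, and an observed agent assumed to be Boltzmann-rational (it picks higher-value actions with higher probability). `q_values` solves the forward RL problem by value iteration for a known reward; `infer_reward` solves the inverse problem by scoring each candidate reward by how well it explains the observed actions, a simplified Bayesian IRL over a finite hypothesis set.

```python
import math

N_STATES = 5          # hypothetical corridor with states 0..4
ACTIONS = (-1, +1)    # move left or move right
GAMMA = 0.9           # discount factor (assumed)
BETA = 5.0            # assumed rationality: higher means closer to optimal

def step(state, action):
    """Deterministic move that stays inside the corridor."""
    return min(max(state + action, 0), N_STATES - 1)

def q_values(reward):
    """Forward RL problem: value iteration for a known reward on entered states."""
    v = [0.0] * N_STATES
    for _ in range(200):
        # Each new value is computed from the previous iteration's list v.
        v = [max(reward[step(s, a)] + GAMMA * v[step(s, a)] for a in ACTIONS)
             for s in range(N_STATES)]
    return {(s, a): reward[step(s, a)] + GAMMA * v[step(s, a)]
            for s in range(N_STATES) for a in ACTIONS}

def log_likelihood(demos, reward):
    """log P(observed actions | reward) for a Boltzmann-rational agent."""
    q = q_values(reward)
    total = 0.0
    for s, a in demos:
        z = sum(math.exp(BETA * q[(s, b)]) for b in ACTIONS)
        total += BETA * q[(s, a)] - math.log(z)
    return total

def infer_reward(demos, candidates):
    """Inverse problem: posterior over candidate rewards, uniform prior."""
    logs = {name: log_likelihood(demos, r) for name, r in candidates.items()}
    top = max(logs.values())
    weights = {name: math.exp(l - top) for name, l in logs.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}

# Hypothetical hypotheses about what the agent wants.
candidates = {
    "goal_left":   [1.0, 0.0, 0.0, 0.0, 0.0],
    "goal_middle": [0.0, 0.0, 1.0, 0.0, 0.0],
    "goal_right":  [0.0, 0.0, 0.0, 0.0, 1.0],
}
demos = [(0, +1), (1, +1), (2, +1), (3, +1)]  # observed: the agent keeps moving right
posterior = infer_reward(demos, candidates)
print(max(posterior, key=posterior.get))  # goal_right
```

Because the observed agent always moves right, the reward at the right end of the corridor explains the behavior best and receives almost all of the posterior mass. Restricting the search to a few candidates keeps the sketch short; practical IRL methods search a parameterized space of reward functions instead.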


Someone on Quora asks: Why don’t I just expose myself to the coronavirus and get immunity?

Immunity is another reward that could be modeled, but this behavior would most likely clash with humanitarian and social values.

If some artificial intelligence one day reaches superhuman capabilities, IRL might be one approach to understanding what humans want and, hopefully, to working towards those goals.

The ideal edge platform engine

Fig 2: Diagram with hints on how to create a universal edge platform. Copyright Ahrono Associates

In other words, in today's edge platforms "the goal is to extract the reward function from the observed behavior of an agent" does not happen: nobody systematically learns what edge customers actually want. The hackathon is pure, isolated serendipity, a bet. It does not scale to significant revenue and wide adoption.

The solution is to build the Perfect AI Edge Making Engine. Both ISVs and developers talk to it to clarify what they really want from an edge: what rewards, objectives, and values are they trying to achieve?
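How might such an engine begin to learn what a developer wants? The sketch below is purely illustrative, and every detail is an assumption rather than part of the diagram: each candidate edge site is reduced to two hypothetical features (normalized latency and whether data stays in-country), the developer's past choices are invented, and the developer is assumed to pick a site with probability proportional to exp(reward), where the reward is a weighted sum of features. Under those assumptions, a one-step version of maximum-entropy IRL reduces to fitting a multinomial logit model: gradient ascent moves the weights until the feature counts the model expects match the ones actually observed.

```python
import math

def choice_probs(w, options):
    """Softmax over linear rewards r = w . f (assumed Boltzmann-rational chooser)."""
    rewards = [sum(wi * fi for wi, fi in zip(w, f)) for f in options]
    top = max(rewards)
    exps = [math.exp(r - top) for r in rewards]
    z = sum(exps)
    return [e / z for e in exps]

def fit_weights(situations, n_features, lr=0.5, l2=0.1, iters=2000):
    """One-step maximum-entropy IRL: match observed and expected feature counts."""
    w = [0.0] * n_features
    for _ in range(iters):
        grad = [-l2 * wi for wi in w]  # L2 penalty keeps the weights finite
        for options, chosen in situations:
            p = choice_probs(w, options)
            for k in range(n_features):
                expected = sum(pi * f[k] for pi, f in zip(p, options))
                grad[k] += options[chosen][k] - expected
        w = [wi + lr * g for wi, g in zip(w, grad)]
    return w

# Hypothetical history: each entry is ([site features...], index of the chosen site).
# Feature vector per site: [normalized latency, in_country flag].
observed = [
    ([[0.2, 0.0], [0.2, 1.0]], 1),  # same latency: picked the in-country site
    ([[0.3, 1.0], [0.7, 1.0]], 0),  # both in-country: picked the faster site
    ([[0.1, 0.0], [0.5, 1.0]], 1),  # accepted more latency to stay in-country
    ([[0.6, 0.0], [0.4, 0.0]], 1),  # both abroad: picked the faster site
]
w_latency, w_in_country = fit_weights(observed, n_features=2)
print(f"latency weight {w_latency:.2f}, in-country weight {w_in_country:.2f}")
```

A negative latency weight and a positive in-country weight would say this developer cares about data residency first and, among compliant sites, prefers speed. A real engine would need far richer features (location, business model, regulation) and many more observations.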

This is not trivial: IRL is a recent advance in machine learning, with few practitioners so far, mostly researchers. The diagram can serve as a focused brainstorm on how to proceed before the Perfect AI Edge Making Engine becomes reality.
