Posts

Showing posts from February, 2015

Revolutionary Cloud Computing e-books from Tim Chou

Enterprise Cloud Adoption: Why Is It So Slow?

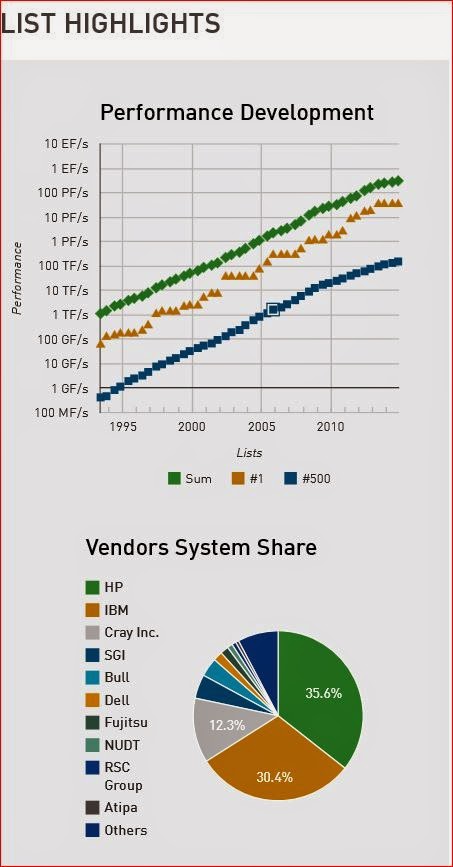

The memorable talk by Dr. Horst Simon at the HPC San Francisco Meetup

Deconstructing Joyent's latest container technology

Tutum is set to dockerize the Enterprise.